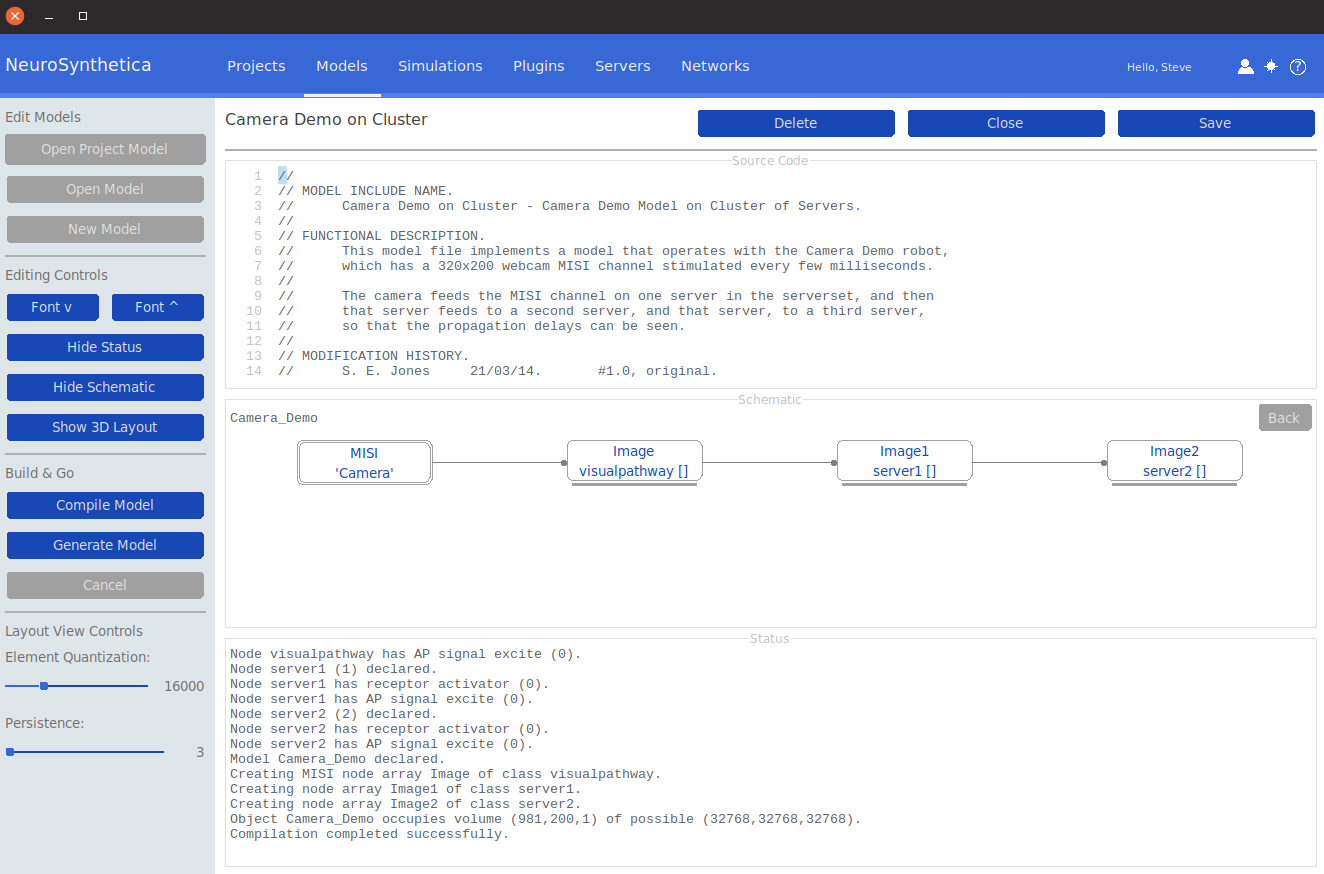

Interactive Model Development

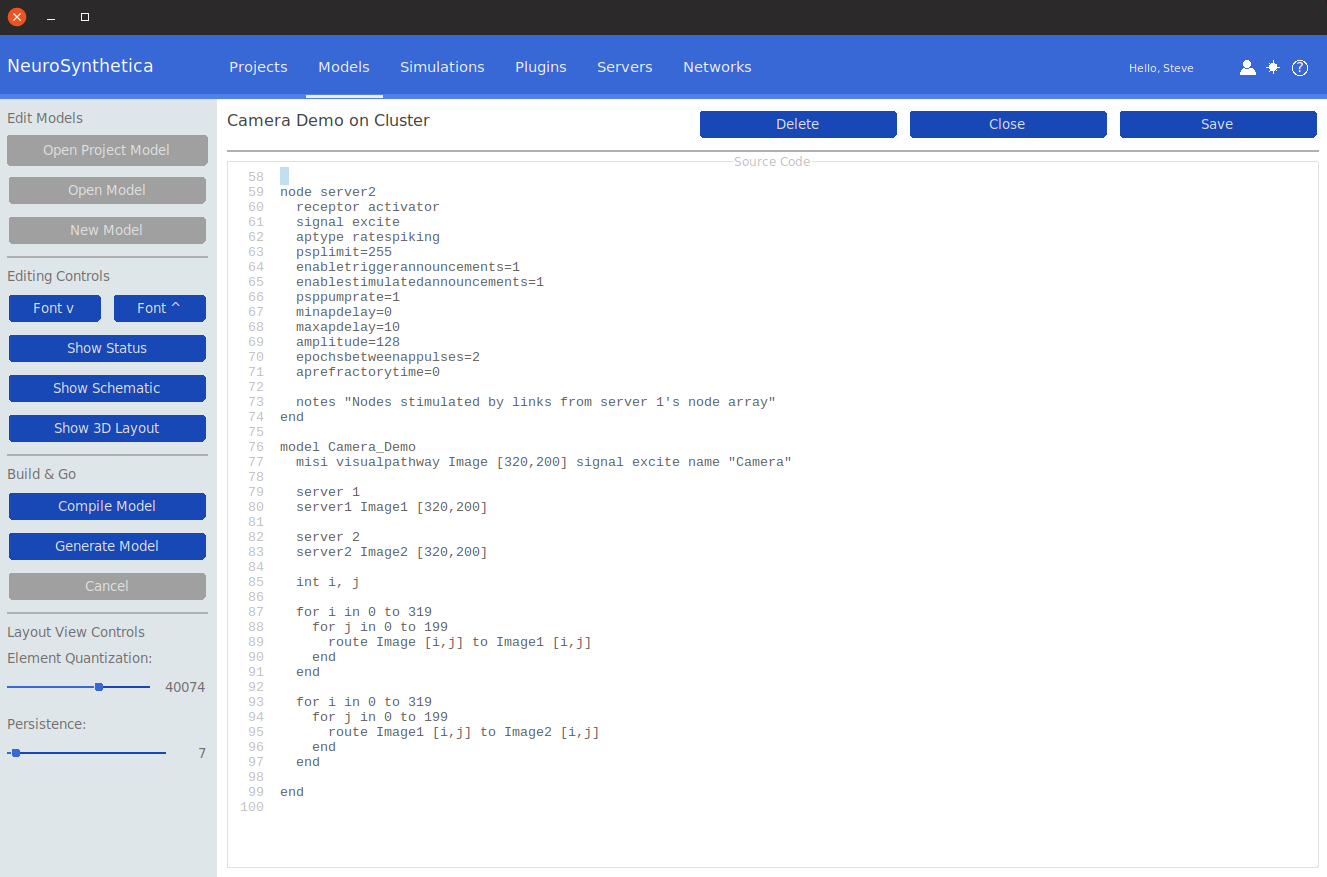

The NeuroSynthetica SOMA™ Modeling Language is similar to a hardware description language such as VHDL or Verilog, and is used to describe models containing nodes and their receptors and signals, arrays of nodes, objects (similar to a structure in a programming language), arrays of objects (fabrics), and I/O channels.

Using the Workbench, models can be quickly described in the NeuroSynthetica SOMA™ modeling language and compiled, so that they are ready to be built on a target server set. The resulting compilation can be visualized in graphical schematic form as well as graphical 3D form.

Nodes, receptors, signals, and objects are instantiated from classes defined in the language. A class specifies the operational parameters of the node or receptor, or the constituent elements of the object. Once a class is defined, single nodes and objects, as well as 1-, 2-, and 3-dimensional arrays of nodes and objects, may be declared in the model based on that class.
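As a rough mental model of class-based instantiation, the Python sketch below treats a node class as a named bundle of operational parameters and an array declaration as stamping out instances across one to three dimensions. It is not SOMA syntax, which this page does not show; `NodeClass`, `Node`, `declare_array`, and the `threshold` parameter are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class NodeClass:
    """Hypothetical stand-in for a node class: a name plus operational parameters."""
    name: str
    params: dict


@dataclass
class Node:
    """One instance of a node class, identified by its array index."""
    cls: NodeClass
    index: tuple


def declare_array(cls, *dims):
    """Instantiate a 1-, 2-, or 3-dimensional array of nodes from one class."""
    if not 1 <= len(dims) <= 3:
        raise ValueError("arrays are 1-, 2-, or 3-dimensional")
    return {idx: Node(cls, idx) for idx in product(*(range(d) for d in dims))}


# Class name and parameter are illustrative, not taken from the SOMA language.
pyramidal = NodeClass("Pyramidal", {"threshold": 0.5})
row = declare_array(pyramidal, 8)          # 1-D array
sheet = declare_array(pyramidal, 8, 8)     # 2-D array
block = declare_array(pyramidal, 4, 4, 4)  # 3-D array
print(len(row), len(sheet), len(block))
```

Every instance shares the parameters of its class, which is the point of defining the class once and declaring arrays from it.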

Object classes are similar to function definitions in a programming language; in addition to defined elements, they support executable statements used to wire up their constituent elements. The language supports modern programming constructs such as FOR, WHILE, IF, variables and expressions, and assignment statements. The ROUTE statement is used to connect the output signal of an afferent node to the input of an efferent node. During neurogenesis (after compilation), these statements execute with high performance and can create and wire up nodes on the server set over a gigabit network at a rate of over 20,000 links per second.
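To get a feel for what that rate means in practice, the Python sketch below mimics nested FOR loops issuing one ROUTE per afferent/efferent pair and estimates the wiring time at the quoted 20,000 links per second. It is not SOMA code: the `route` and `fully_connect` helpers and the layer sizes are made up for illustration. Because the quoted figure is a lower bound on throughput, the estimate is an upper bound on time.

```python
LINKS_PER_SECOND = 20_000  # rate quoted above ("over 20,000 links per second")


def route(links, afferent, efferent):
    """Record one link from an afferent node's output to an efferent node's input."""
    links.append((afferent, efferent))


def fully_connect(n_afferent, n_efferent):
    """Wire every afferent node to every efferent node, as nested FOR loops would."""
    links = []
    for a in range(n_afferent):
        for e in range(n_efferent):
            route(links, ("in", a), ("out", e))
    return links


links = fully_connect(100, 200)
seconds = len(links) / LINKS_PER_SECOND
print(f"{len(links)} links, about {seconds:.1f} s at the quoted rate")
```

Fully connecting a 100-node layer to a 200-node layer takes 20,000 links, so at the quoted rate it would be wired in roughly one second or less.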

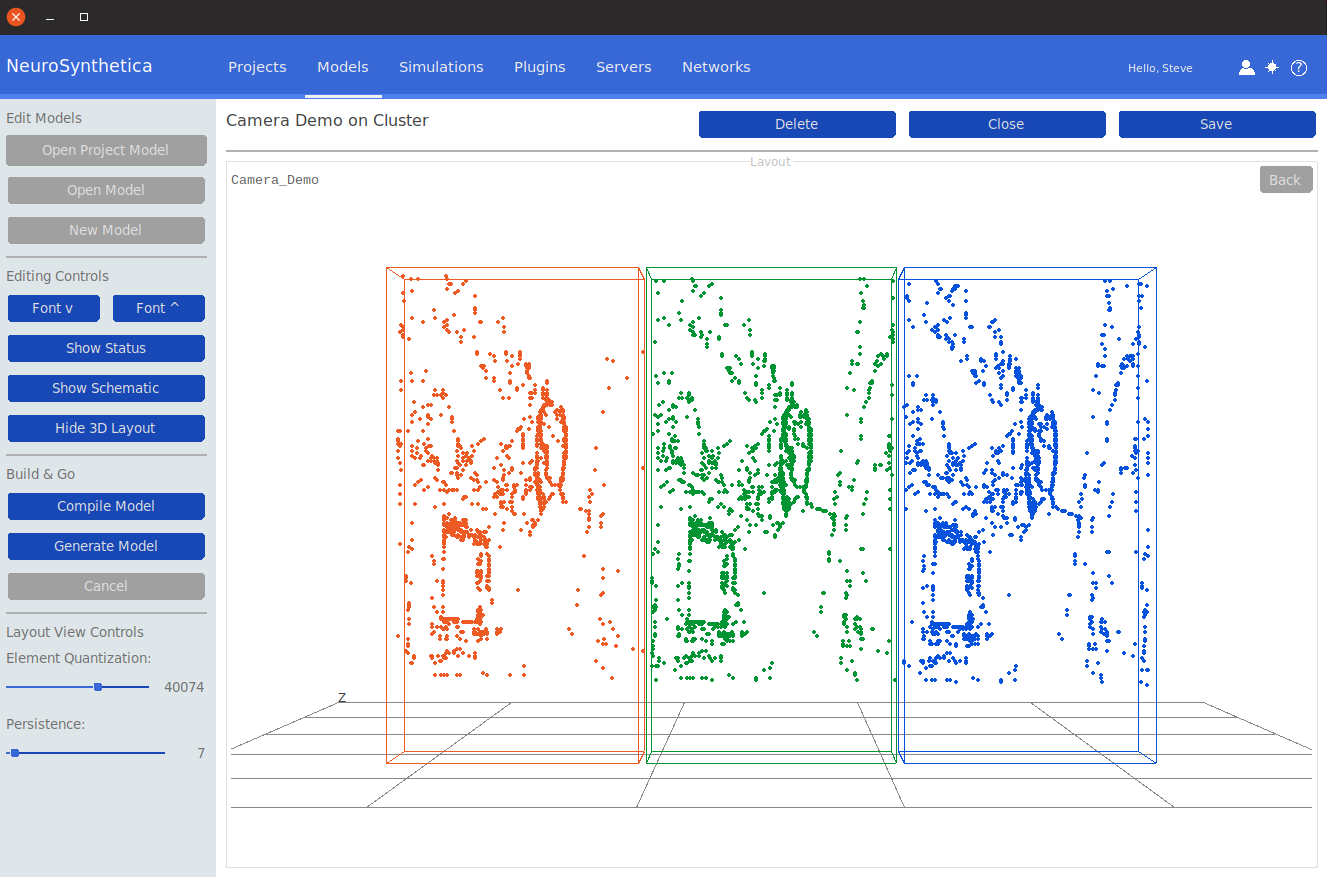

As in traditional application programming in a high-level language, NeuroSynthetica's SOMA™ modeling language allows nodes and other objects to be defined without explicitly declaring their coordinates in the 3D model space. Optional statements give the designer the flexibility to place objects at coordinates relative to an object class's origin, defining a precise 3D structure that may be replicated in object arrays to construct neurocomputational fabrics.
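The idea of relative placement can be illustrated with a short Python sketch: an object class lists its elements as offsets from its own origin, and replicating the class across a grid turns those offsets into absolute coordinates. This is only an illustration of the geometry, not SOMA syntax; the `COLUMN_CLASS` layout, the `place_fabric` helper, and the `pitch` spacing parameter are assumptions.

```python
from itertools import product

# Hypothetical object class: element name -> (x, y, z) offset from the class origin.
COLUMN_CLASS = {
    "input": (0.0, 0.0, 0.0),
    "hidden": (0.0, 0.0, 1.0),
    "output": (0.0, 0.0, 2.0),
}


def place_fabric(obj_class, dims, pitch):
    """Replicate an object class over a grid and return absolute coordinates.

    dims  -- number of objects along x, y, z
    pitch -- spacing between neighbouring object origins along x, y, z
    """
    placed = {}
    for idx in product(*(range(d) for d in dims)):
        # Origin of this copy of the object within the model space.
        origin = tuple(i * p for i, p in zip(idx, pitch))
        for element, offset in obj_class.items():
            placed[(idx, element)] = tuple(o + d for o, d in zip(origin, offset))
    return placed


fabric = place_fabric(COLUMN_CLASS, dims=(3, 2, 1), pitch=(1.0, 1.0, 3.0))
print(fabric[((2, 1, 0), "output")])  # (2.0, 1.0, 2.0)
```

The structure is described once, relative to the class origin, and every copy in the array inherits the same internal geometry.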

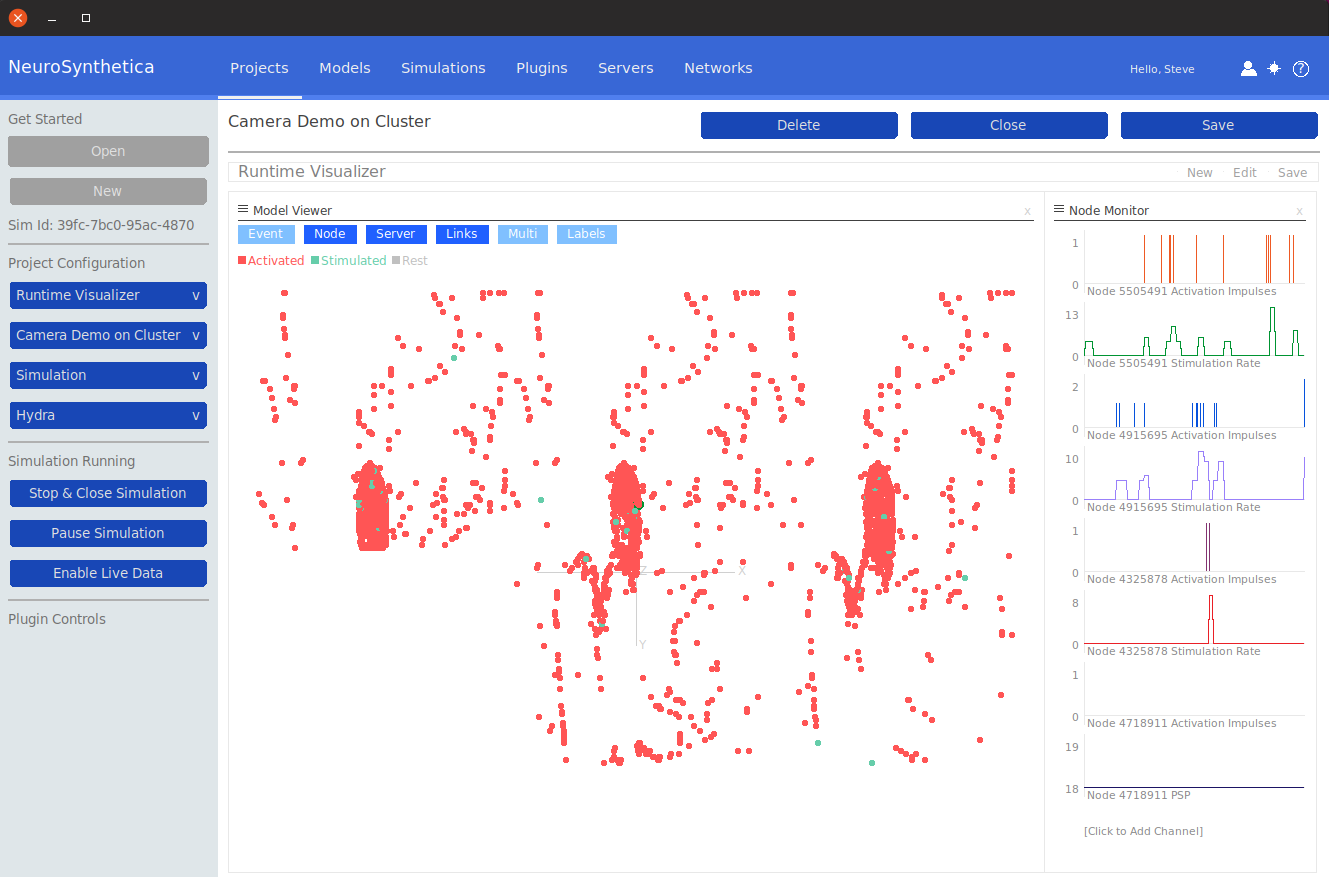

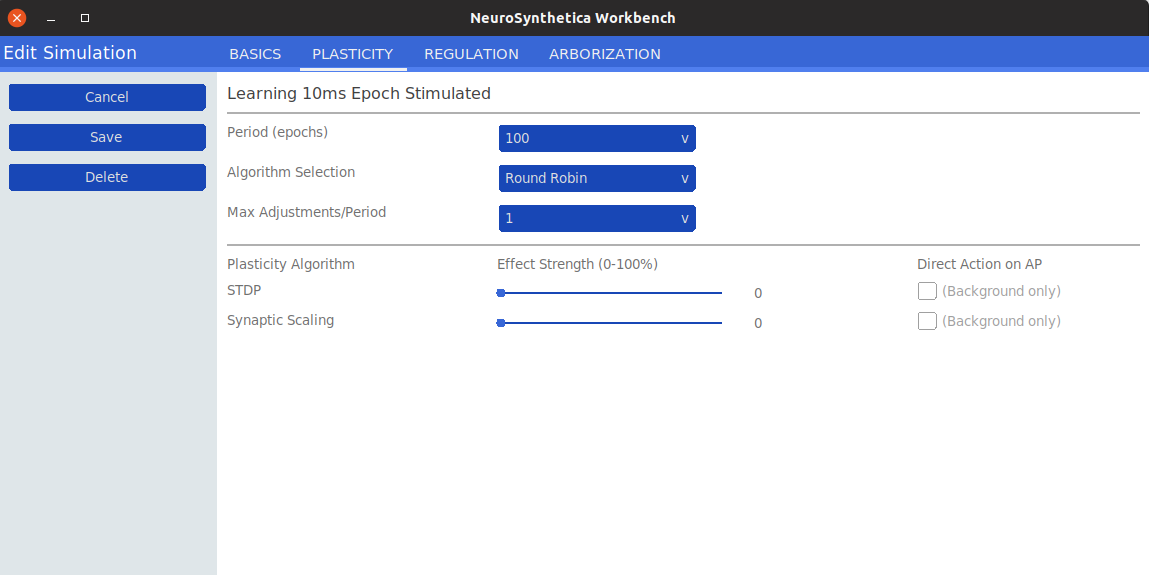

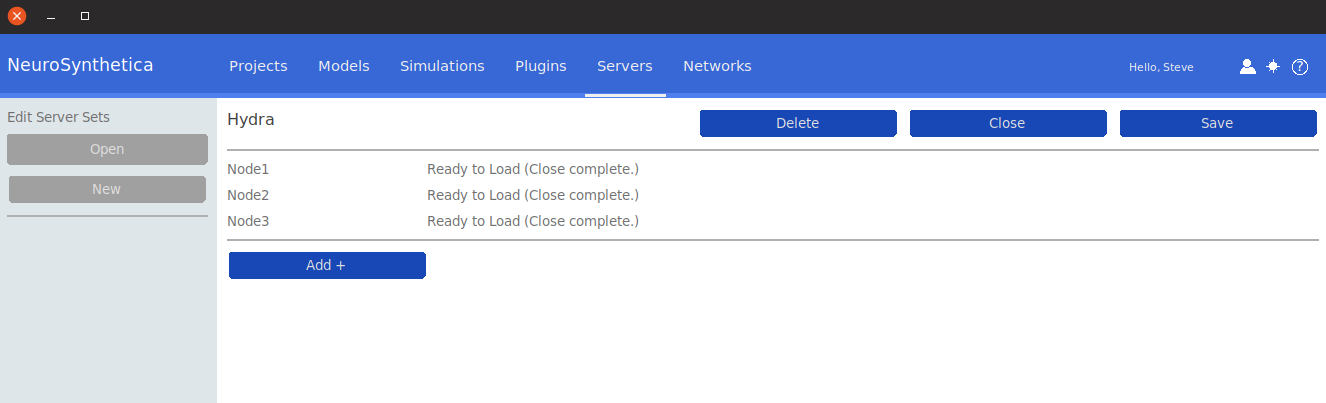

The synthetic brain development cycle is interactive and rapid. First, a project is declared, defining the server set, simulation type, model name, and initial dashboard. Next, a model definition source file is created, which may include predefined class definitions for nodes, receptors, and signals. Then objects, which are similar to structures but also contain the active code described above, are defined, including the top-level object (analogous to the main() function in C++). One-click compilation nominally takes under one second, and the resulting schematic and 3D layout views are readily available from the compiled model.

If syntax errors arise, the developer can correct them in the interactive environment and recompile, rapidly arriving at a successful model build. Once compiled successfully, the model may be deployed to the server set with one click.

The user can type in and edit SOMA language statements in the model source files with the built-in text editor, or the Workbench can be configured to run the user's own text editor instead.